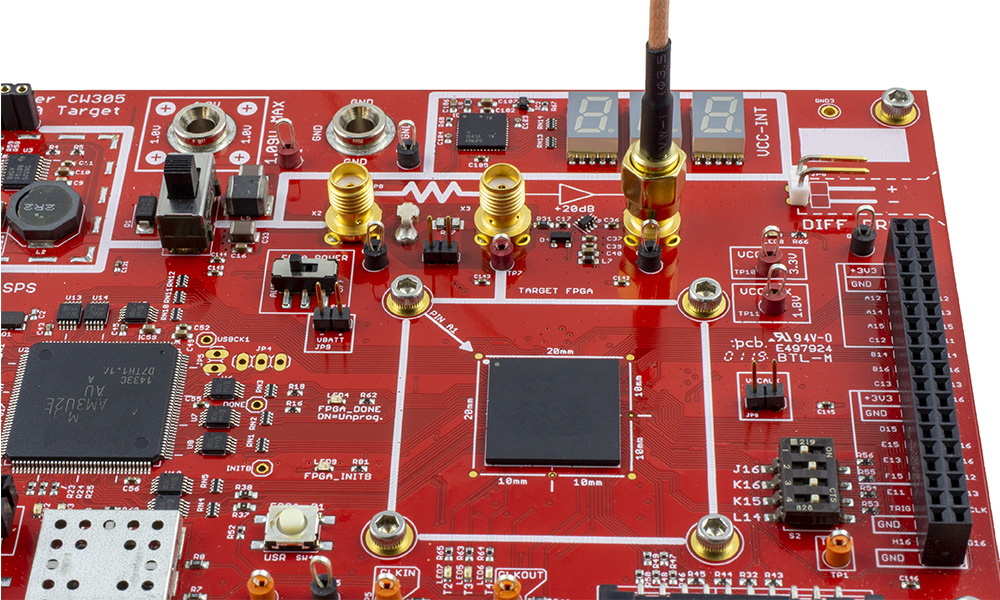

KeyLogic data scientists and embedded-system software engineers are rewriting convolutional neural network (CNN) algorithms to run smoothly and efficiently on field-programmable gate arrays (FPGAs). More than standard CPUs, and even more than graphics processing units (GPUs), FPGAs can massively accelerate deep learning algorithms on edge devices with tight power and weight budgets, for computer vision and other ML/DL applications.

Our engineers understand the special challenges of these chips and the difficulties of migrating code from a CPU- or GPU-based development environment to an operational FPGA. We do more than refactor the code: we select the most suitable FPGA models and tune the application to achieve the best possible performance with the available resources. We also build interfaces and design test processes. We draw on advanced mathematical techniques to convert floating-point calculations to fixed-point, quantized integer calculations without compromising the effectiveness of the machine learning models.

Not every machine learning algorithm needs to be migrated to an FPGA; the development cost and time can be prohibitive for general-purpose AI use. For more demanding applications, however, such as those requiring maximum acceleration, smallest size, lowest power, or lightest weight, FPGAs are the best solution. These applications include commercial Internet of Things (IoT) and industrial SCADA devices, front-line military and first responder equipment, drones in flight or at sea, and satellites in orbit.
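To make the floating-point to fixed-point conversion mentioned above more concrete, here is a minimal sketch in Python of one common approach: symmetric 8-bit quantization with a single scale factor per tensor. It is an illustration only, not KeyLogic's actual method. The function names (`quantize_symmetric`, `int_dot`) and the sample values are hypothetical and chosen for this example.

```python
def quantize_symmetric(values, num_bits=8):
    """Map floats to signed integers that share one scale factor.

    Assumption for illustration: symmetric, per-tensor quantization.
    Real deployments may use per-channel scales or zero points.
    """
    qmax = 2 ** (num_bits - 1) - 1  # 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    # Round to the nearest integer and clamp to the representable range.
    quantized = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return quantized, scale


def int_dot(qa, qb):
    """Dot product using integer multiply-accumulate only."""
    return sum(a * b for a, b in zip(qa, qb))


# Hypothetical weights and activations for one neuron.
weights = [0.5, -1.0, 0.25, 0.75]
activations = [1.0, 0.5, -0.5, 2.0]

q_weights, w_scale = quantize_symmetric(weights)
q_activations, a_scale = quantize_symmetric(activations)

# The heavy arithmetic stays in integers; one rescale recovers a float.
approx = int_dot(q_weights, q_activations) * w_scale * a_scale
exact = sum(w * x for w, x in zip(weights, activations))

print(f"exact={exact:.4f} approx={approx:.4f} error={abs(exact - approx):.4f}")
```

The integer multiply-accumulate in `int_dot` is the kind of operation that maps well onto FPGA logic, while the single floating-point rescale at the end can often be folded into a later stage. The small difference between `exact` and `approx` is the quantization error that has to stay low enough to preserve model accuracy.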